Machine Learning For Key Generation

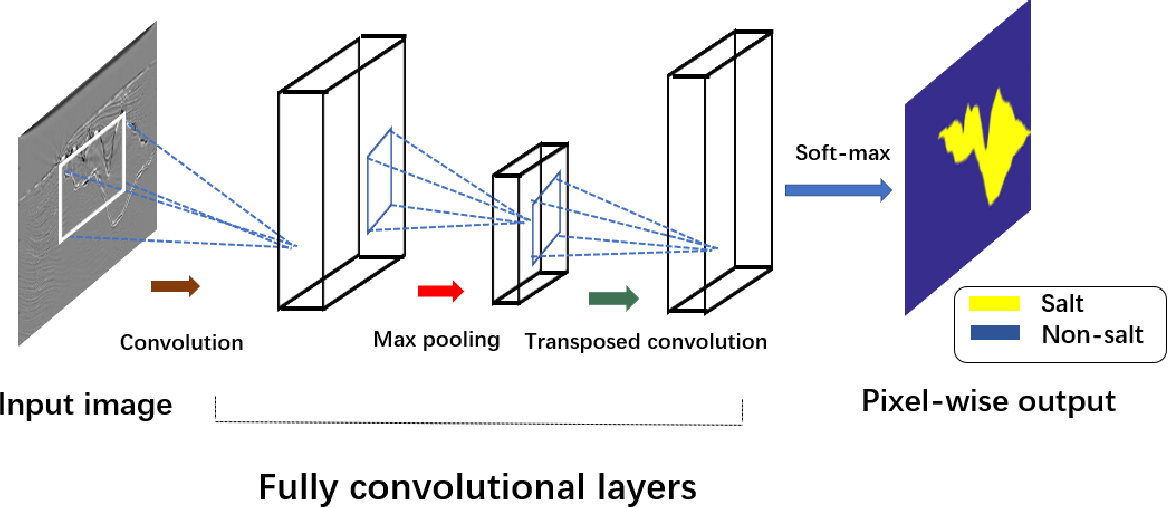

Digital watermarking has become a key technology for protecting copyrights. In this paper, we propose a key generation scheme for static visual digital watermarking that uses machine learning, with a neural network as the exemplary machine learning method. Briefly, for a problem of interest (say, the identification and annotation of genes in a newly sequenced genome), a machine-learning algorithm will learn key properties of existing annotated genomes, such as what constitutes a transcriptional start site and specific genomic properties of genes such as GC content and codon usage, and will then use this knowledge to generate a model for finding genes given all of the genomic sequences on which it was trained.


Candidate generation is the first stage of recommendation. Given a query, the system generates a set of relevant candidates. The following table shows two common candidate generation approaches:


| Type | Definition | Example |
|---|---|---|
| content-based filtering | Uses similarity between items to recommend items similar to what the user likes. | If user A watches two cute cat videos, then the system can recommend cute animal videos to that user. |
| collaborative filtering | Uses similarities between queries and items simultaneously to provide recommendations. | If user A is similar to user B, and user B likes video 1, then the system can recommend video 1 to user A (even if user A hasn't seen any videos similar to video 1). |
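The collaborative-filtering row can be made concrete with a minimal sketch. It is a simple user-to-user neighborhood variant, not the embedding-based approach described below, and it assumes that "user A is similar to user B" is measured by the cosine similarity of their rows in a hypothetical 0/1 interaction matrix. The helper names `cosine` and `recommend_from_similar_user` are illustrative only.

```python
import numpy as np

# Hypothetical toy data: rows are users, columns are videos; 1 = the user liked it.
interactions = np.array([
    [1, 1, 0, 0],  # user A
    [1, 1, 1, 0],  # user B
    [0, 0, 0, 1],  # user C
], dtype=float)


def cosine(u: np.ndarray, v: np.ndarray) -> float:
    """Cosine similarity between two (non-zero) interaction vectors."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


def recommend_from_similar_user(matrix: np.ndarray, user: int) -> list[int]:
    """Recommend items liked by the most similar other user but unseen by `user`."""
    others = [i for i in range(matrix.shape[0]) if i != user]
    nearest = max(others, key=lambda i: cosine(matrix[user], matrix[i]))
    unseen_liked = (matrix[nearest] > 0) & (matrix[user] == 0)
    return [int(j) for j in np.flatnonzero(unseen_liked)]


print(recommend_from_similar_user(interactions, user=0))  # → [2]
```

User A's nearest neighbor is user B, so A is offered video 2 even though nothing in A's own history resembles it, which mirrors the "even if user A hasn't seen any videos similar to video 1" case in the table.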


Embedding Space

Both content-based and collaborative filtering map each item and each query (or context) to an embedding vector in a common embedding space $E = \mathbb{R}^d$. Typically, the embedding space is low-dimensional (that is, $d$ is much smaller than the size of the corpus), and captures some latent structure of the item or query set. Similar items, such as YouTube videos that are usually watched by the same user, end up close together in the embedding space. The notion of "closeness" is defined by a similarity measure.

Extra Resource: projector.tensorflow.org is an interactive tool to visualize embeddings.

Similarity Measures

A similarity measure is a function $s : E \times E \to \mathbb{R}$ that takes a pair of embeddings and returns a scalar measuring their similarity. The embeddings can be used for candidate generation as follows: given a query embedding $q \in E$, the system looks for item embeddings $x \in E$ that are close to $q$, that is, embeddings with high similarity $s(q, x)$.
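The retrieval step can be sketched as a brute-force scan: score every item embedding against $q$ with some similarity $s$ and keep the $k$ best. This is a minimal sketch under stated assumptions. The item embeddings are hypothetical, the dot product is used as $s$ only for concreteness, and `top_k_candidates` is an illustrative name. Systems with large corpora often replace the exhaustive scan with an approximate nearest-neighbor index.

```python
from typing import Callable

import numpy as np

Similarity = Callable[[np.ndarray, np.ndarray], float]


def dot(q: np.ndarray, x: np.ndarray) -> float:
    """Dot-product similarity, used here only as an example choice of s."""
    return float(np.dot(q, x))


def top_k_candidates(q: np.ndarray, items: np.ndarray, s: Similarity, k: int) -> list[int]:
    """Return indices of the k item embeddings with the highest similarity s(q, x)."""
    scores = np.array([s(q, x) for x in items])
    # argsort is ascending, so sort the negated scores to put the highest first.
    return [int(i) for i in np.argsort(-scores, kind="stable")[:k]]


# Hypothetical item embeddings in E = R^2 (d = 2), one row per item.
items = np.array([[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]])
q = np.array([1.0, 0.2])
print(top_k_candidates(q, items, dot, k=2))  # → [0, 2]
```

Passing the similarity as a parameter keeps the retrieval loop independent of which measure (cosine, dot product, or negated Euclidean distance) is chosen.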

To determine the degree of similarity, most recommendation systems rely on one or more of the following:

- cosine

- dot product

- Euclidean distance

Cosine

This is simply the cosine of the angle between the two vectors, $s(q, x) = \cos(q, x)$.
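A minimal sketch of cosine similarity, computed as $\langle q, x \rangle / (\|q\| \|x\|)$. The function name `cosine_similarity` is illustrative, and it assumes both vectors are non-zero, since the cosine is undefined for a zero vector.

```python
import numpy as np


def cosine_similarity(q: np.ndarray, x: np.ndarray) -> float:
    """cos(q, x) = <q, x> / (||q|| ||x||); undefined if either vector is zero."""
    return float(np.dot(q, x) / (np.linalg.norm(q) * np.linalg.norm(x)))


q = np.array([1.0, 0.0])
print(cosine_similarity(q, np.array([3.0, 0.0])))  # → 1.0 (same direction; norm is ignored)
print(cosine_similarity(q, np.array([0.0, 2.0])))  # → 0.0 (orthogonal)
```

Scaling either vector by a positive factor leaves the result unchanged, which is why cosine ignores how large an embedding's norm is.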

Dot Product

The dot product of two vectors is $s(q, x) = \langle q, x \rangle = \sum_{i = 1}^d q_i x_i$. It is also given by $s(q, x) = \|x\| \|q\| \cos(q, x)$ (the cosine of the angle multiplied by the product of norms). Thus, if the embeddings are normalized, then dot product and cosine coincide.
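Both forms of the dot product can be checked numerically. This sketch uses two hypothetical 2-D vectors and computes the angle from `atan2` rather than from the dot product, so the identity $\langle q, x \rangle = \|x\| \|q\| \cos(q, x)$ is a genuine check rather than a restatement. It then confirms that, once both vectors are normalized to unit length, the dot product equals the cosine.

```python
import math

import numpy as np

q = np.array([3.0, 4.0])  # ||q|| = 5
x = np.array([4.0, 3.0])  # ||x|| = 5

dot = float(np.dot(q, x))  # sum_i q_i * x_i = 12 + 12 = 24

# Cosine of the angle between q and x, computed from the angles themselves.
angle = math.atan2(q[1], q[0]) - math.atan2(x[1], x[0])
cos_qx = math.cos(angle)  # 24 / 25 = 0.96

# <q, x> = ||x|| ||q|| cos(q, x)
assert math.isclose(dot, np.linalg.norm(x) * np.linalg.norm(q) * cos_qx)

# For unit-normalized embeddings, the dot product equals the cosine.
q_hat = q / np.linalg.norm(q)
x_hat = x / np.linalg.norm(x)
assert math.isclose(float(np.dot(q_hat, x_hat)), cos_qx)
print(dot, cos_qx)
```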

Euclidean distance

This is the usual distance in Euclidean space, $s(q, x) = \|q - x\| = \left[ \sum_{i = 1}^d (q_i - x_i)^2 \right]^{\frac{1}{2}}$. A smaller distance means higher similarity. Note that when the embeddings are normalized, the squared Euclidean distance coincides with the dot product (and cosine) up to a constant, since in that case $\frac{1}{2} \|q - x\|^2 = 1 - \langle q, x \rangle$.
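The identity for normalized embeddings follows from expanding $\|q - x\|^2 = \|q\|^2 - 2\langle q, x \rangle + \|x\|^2 = 2 - 2\langle q, x \rangle$ when both norms are 1. The sketch below checks it on random unit vectors. The dimension 8 and the seed are arbitrary assumptions. Because the relation is monotone, ranking by smallest distance gives the same order as ranking by largest dot product for unit vectors.

```python
import numpy as np


def euclidean_distance(q: np.ndarray, x: np.ndarray) -> float:
    """||q - x||; a smaller distance means higher similarity."""
    return float(np.sqrt(np.sum((q - x) ** 2)))


rng = np.random.default_rng(0)
q = rng.normal(size=8)
x = rng.normal(size=8)
q /= np.linalg.norm(q)  # normalize to unit length
x /= np.linalg.norm(x)

# For unit vectors: (1/2) ||q - x||^2 = 1 - <q, x>
lhs = 0.5 * euclidean_distance(q, x) ** 2
rhs = 1.0 - float(np.dot(q, x))
print(np.isclose(lhs, rhs))  # → True
```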

Comparing Similarity Measures


Consider the example in the figure to the right. The black vector illustrates the query embedding. The other three embedding vectors (Item A, Item B, Item C) represent candidate items. Depending on the similarity measure used, the ranking of the items can be different.

Using the image, try to determine the item ranking using all three of the similarity measures: cosine, dot product, and Euclidean distance.

Answer Key

How did you do?


Item A has the largest norm, and is ranked highest according to the dot product. Item C has the smallest angle with the query, and is thus ranked first according to the cosine similarity. Item B is physically closest to the query, so Euclidean distance favors it.
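The same disagreement can be reproduced numerically. The coordinates below are hypothetical, chosen only to match the geometry described in the answer (A has the largest norm, C is aligned with the query, B is nearest). They are not read from the figure. Euclidean distance is negated so that every score follows "larger means more similar".

```python
from typing import Callable

import numpy as np

# Hypothetical 2-D embeddings that mimic the described geometry; NOT the figure's data.
q = np.array([1.0, 0.0])
items = {
    "A": np.array([2.0, 2.0]),  # largest norm, wider angle to q
    "B": np.array([0.9, 0.3]),  # closest point to q
    "C": np.array([0.3, 0.0]),  # same direction as q, small norm
}


def dot_score(x: np.ndarray) -> float:
    return float(np.dot(q, x))


def cosine_score(x: np.ndarray) -> float:
    return dot_score(x) / float(np.linalg.norm(q) * np.linalg.norm(x))


def neg_euclidean(x: np.ndarray) -> float:
    # Negate the distance so that a larger score means "more similar".
    return -float(np.linalg.norm(q - x))


def rank(score: Callable[[np.ndarray], float]) -> list[str]:
    """Item names sorted from most to least similar under `score`."""
    return sorted(items, key=lambda name: score(items[name]), reverse=True)


rankings = {
    "dot product": rank(dot_score),
    "cosine": rank(cosine_score),
    "euclidean": rank(neg_euclidean),
}
for measure, order in rankings.items():
    print(measure, order)
# dot product ['A', 'B', 'C']
# cosine ['C', 'B', 'A']
# euclidean ['B', 'C', 'A']
```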

Which Similarity Measure to Choose?


Compared to the cosine, the dot product similarity is sensitive to the norm of the embedding. That is, the larger the norm of an embedding, the higher the similarity (for items with an acute angle) and the more likely the item is to be recommended. This can affect recommendations as follows:

Items that appear very frequently in the training set (for example, popular YouTube videos) tend to have embeddings with large norms. If capturing popularity information is desirable, then you should prefer dot product. However, if you're not careful, the popular items may end up dominating the recommendations. In practice, you can use other variants of similarity measures that put less emphasis on the norm of the item. For example, define $s(q, x) = \|q\|^\alpha \|x\|^\alpha \cos(q, x)$ for some $\alpha \in (0, 1)$.
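A minimal sketch of the damped similarity $s(q, x) = \|q\|^\alpha \|x\|^\alpha \cos(q, x)$. The "popular" and "niche" embeddings and the two $\alpha$ values are hypothetical, picked to show the effect. With $\alpha = 1$ the formula is exactly the dot product, and as $\alpha \to 0$ it approaches the cosine, so $\alpha$ interpolates between "popularity matters" and "direction only".

```python
import numpy as np


def damped_similarity(q: np.ndarray, x: np.ndarray, alpha: float) -> float:
    """s(q, x) = ||q||^alpha * ||x||^alpha * cos(q, x) for alpha in (0, 1)."""
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie in the open interval (0, 1)")
    norm_q, norm_x = np.linalg.norm(q), np.linalg.norm(x)
    cos_qx = np.dot(q, x) / (norm_q * norm_x)
    return float(norm_q**alpha * norm_x**alpha * cos_qx)


# Hypothetical embeddings: a "popular" item with a large norm but a wider angle,
# and a "niche" item with a small norm that points almost exactly along q.
q = np.array([1.0, 0.0])
popular = np.array([10.0, 10.0])
niche = np.array([1.0, 0.1])

# Plain dot product strongly favors the popular item (10.0 vs 1.0).
print(float(np.dot(q, popular)), float(np.dot(q, niche)))
# Small alpha lets the better-aligned niche item win; alpha near 1 behaves like the dot product.
for alpha in (0.1, 0.9):
    print(alpha, damped_similarity(q, popular, alpha), damped_similarity(q, niche, alpha))
```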

Items that appear very rarely may not be updated frequently during training. Consequently, if they are initialized with a large norm, the system may recommend rare items over more relevant items. To avoid this problem, be careful about embedding initialization, and use appropriate regularization. We will detail this problem in the first exercise.
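One common way to follow this advice is sketched below. It is not the exercise the text refers to, and the specific choices are assumptions: a small-variance normal initialization (the `init_std = 0.01`, `d = 16`, and corpus size are hypothetical values) and an L2 penalty $\lambda \sum_j \|x_j\|^2$ added to the loss. The last part shows that an item receiving no data gradient still has its norm shrunk by the penalty, by a factor of $1 - 2\,\text{lr}\,\lambda$ per gradient step.

```python
import numpy as np

rng = np.random.default_rng(0)
num_items, d = 1000, 16

# Small-variance initialization (std is a hypothetical choice): initial norms are
# roughly init_std * sqrt(d), so rarely-updated items start with small norms.
init_std = 0.01
item_embeddings = rng.normal(0.0, init_std, size=(num_items, d))


def l2_penalty(embeddings: np.ndarray, weight: float) -> float:
    """L2 regularization term weight * sum_j ||x_j||^2, added to the training loss."""
    return float(weight * np.sum(embeddings**2))


def l2_penalty_grad(embeddings: np.ndarray, weight: float) -> np.ndarray:
    """Gradient of l2_penalty with respect to the embeddings."""
    return 2.0 * weight * embeddings


# A rare item that somehow has a large norm and gets no data gradient still
# shrinks: each step multiplies it by (1 - 2 * lr * weight) = 0.9 here.
rare_item = np.full(d, 1.0)  # norm = sqrt(16) = 4.0
lr, weight = 0.1, 0.5
for _ in range(10):
    rare_item -= lr * l2_penalty_grad(rare_item, weight)
print(np.linalg.norm(item_embeddings, axis=1).mean(), np.linalg.norm(rare_item))
```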